I've just posted a new paper to bioRxiv entitled "Evaluating species distribution models with discrimination accuracy is uninformative for many applications", written in collaboration with Nick Matzke and Teresa Iglesias. Many of you have seen me present this work at conferences over the past few years, but this is the first time it's been available in a publicly accessible format. The conclusions of this paper are fairly problematic for SDM/ENM studies where the goal is to estimate the response of the species to a set of environmental gradients, rather than just to estimate its distribution, so I think it's worthwhile to do a preemptive breakdown and FAQ about what we are, and aren't, saying with this one, and to try to respond to some obvious questions it raises.

Main conclusion: Using a very large set of simulations, we find that discrimination accuracy (AUC/TSS/kappa) measured on occurrence data is, under a broad range of conditions, not very useful for selecting models that accurately estimate the niche. Here, "accurately estimating the niche" means getting the relative suitability of habitat right. In other words, models that get your species' current spatial distribution right are not necessarily models that accurately estimate the relative suitability of habitat, and vice versa. Under many realistic conditions, we simply cannot tell good niche estimates from bad ones this way.

How is this possible? People have actually known that discrimination accuracy can be largely misinformative about calibration for quite some time, and this has even been brought up multiple times before in the SDM/ENM literature. We're not really demonstrating a new problem here, so much as demonstrating the scope of a known problem.

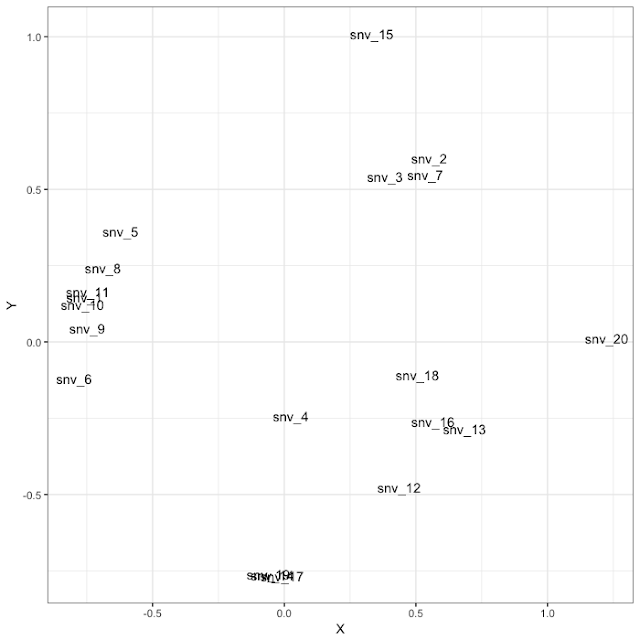

As for how this is possible, consider the following plot:

Here we've got a bunch of presence and absence (or background/pseudoabsence) data, distributed along a one-dimensional environmental gradient. The solid lines represent six different models we could use to explain or generalize this species' distribution along that gradient. These are obviously very different models, right? The issue is that discrimination accuracy essentially asks "how well do you assign higher scores to your presence data than you do to your non-presence data?", and in this example every one of these models assigns higher scores to all of the presence points than they do to the non-presence points.

At first pass the message you might get from this is "okay, discrimination accuracy is saying some models are good that seem quite wacky, but it's also saying that some models are good that might be quite sensible". That's true, but the real issue is not just that it's assigning high discrimination accuracy to all of the models; it's actually assigning the same accuracy to all of these models. That means that if you had these data and this set of models in hand, you would be unable to choose between them using discrimination accuracy alone; their performance is identical.
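To make that concrete, here's a minimal sketch in Python. It is not the simulation code from the paper: the data points, the six response curves, and the `auc` helper are all made up for illustration, and the only assumption that matters is that presences and non-presences are cleanly separated along the gradient, as in the plot.

```python
import math


def auc(presence_scores, absence_scores):
    """AUC as the share of presence/absence pairs ranked correctly (ties count half)."""
    wins = 0.0
    for p in presence_scores:
        for a in absence_scores:
            if p > a:
                wins += 1.0
            elif p == a:
                wins += 0.5
    return wins / (len(presence_scores) * len(absence_scores))


# Made-up data: non-presences at the low end of the gradient, presences at the high end.
absences = [0.5, 1.0, 1.5, 2.0, 2.5, 3.0]
presences = [6.0, 6.5, 7.0, 7.5, 8.0, 9.0]

# Six hypothetical response curves with very different shapes.
models = {
    "linear": lambda x: x / 10.0,
    "logistic": lambda x: 1.0 / (1.0 + math.exp(-(x - 4.5))),
    "steep_logistic": lambda x: 1.0 / (1.0 + math.exp(-5.0 * (x - 5.5))),
    "step": lambda x: 1.0 if x > 4.5 else 0.0,
    "gaussian": lambda x: math.exp(-((x - 7.5) ** 2) / 8.0),
    "exponential": lambda x: math.exp(x - 10.0),
}

aucs = {}
for name, f in models.items():
    aucs[name] = auc([f(x) for x in presences], [f(x) for x in absences])
    # Also report what each model says about an unsampled part of the gradient.
    print(f"{name:15s} AUC = {aucs[name]:.2f}   suitability at x=4.5: {f(4.5):.3f}")
```

Every curve ranks all presences above all non-presences, so every one gets the same perfect AUC, even though they disagree about suitability at the unsampled point `x = 4.5`. AUC only sees the ranking of the sampled points, not the shape of the curve that produced it.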

This points to the root of the problem, as we see it; if your goal is to estimate a species' continuous response to the environment, discrimination accuracy has very little signal that is useful for model selection because you may have an arbitrarily high number of possible models that have the same (or similar) discrimination accuracy.

Using methods that involve a high number of predictors and complex response functions substantially exacerbates this problem; we found that discrimination accuracy is useful for model selection mainly when you have a few (fewer than ~4) predictors and when the true marginal suitability function is a realizable case of the modeling algorithm. This means that discrimination accuracy isn't very effective at model selection when you're using (for example) some sort of step function to approximate a smooth one.

That's the statistical argument, but there's an ecological argument to be made here as well: we know that species' spatial distributions are shaped by a number of phenomena besides their environmental niches (biotic interactions, historical biogeographic processes, etc.). This means that an accurate estimate of environmental habitat suitability will, in many cases, over- or under-estimate the actual distribution of the species. Finding that good distribution estimates sometimes aren't great niche estimates (and vice versa) therefore shouldn't be surprising from a purely biological perspective.

Does this mean my models are bad? It doesn't necessarily mean that at all! What it means is that, if you're in a spot where you have a set of candidate models that have a range of performance using discrimination accuracy, discrimination accuracy is often not useful for choosing between them. If all of your models are good niche estimates (which they might be), this may just mean that you didn't necessarily pick the best niche estimate out of the batch.

It does have broader implications for the methodological literature, however. Over the past few decades, we have developed a set of "best practices" for SDM/ENM studies. These "best practices" have been favored largely based on studies where people quantify models' ability to predict withheld occurrence data via some measure of discrimination accuracy. Our results imply that those "best practices" may only be best practices when the goal is to estimate species' distributions, and we may need to entirely reevaluate which of those methods work best for making good niche estimates.

Is it possible that you just screwed up the simulations? It's always possible, but we did a lot of checking and double-checking to make sure our simulations do what they say on the tin. Honestly the results are worrying enough that I would be quite happy to find out that this is all just due to me screwing up the simulation at some point that I haven't managed to find yet. I will say, however, that I've recently been doing an entirely separate set of simulations for another purpose using entirely different methods of simulating species and occurrence data, and have found very similar results.

But you didn't use method X (where X = some method of choosing predictors, selecting study area, favored algorithm, etc.)! This is a comment that seems to pop up every time this work is presented at a meeting or seminar. It's true that we didn't explore every possible combination of methods in our analyses, and model construction was basically automated. We did try to explore a pretty decent space of common methods though, including seven different algorithms, two different sizes of study region, spatially partitioned vs. random model validation, and varying levels of model and niche complexity. Some of it seemed to make a bit of difference, but the only things we could find that seemed to really improve matters markedly were making models with few predictors and making models with biologically realistic response functions (i.e., those that matched the true niche).

As a result of exploring all of those combinations of approaches, this study involved the construction of many thousands of models, and we can't really do that in a hands-on fashion. "It's a lot of work" isn't really a great argument, though, so let me clarify our reasoning on this topic. There are a few things to note here:

- In order to explore the relationship between discrimination accuracy and our ability to estimate the niche (which we're calling "functional accuracy"), we actually need models with a range of performance on both of those axes, including some that are actually bad at one or the other, or both.

- The question we're addressing is not "can we make good niche estimates?", or "can we make good distribution estimates?", or even "how do we make the best niche and/or distribution estimates?" The question we're asking has to do with whether or not evaluating models as distribution estimates helps us pick good niche estimates. As such, using methods that have been shown to make better distribution estimates won't necessarily affect our results at all. In fact, even finding methods that make better distribution and niche estimates won't affect our results unless those methods also produce a tighter correlation between discrimination and functional accuracy. Given that we have no a priori reason to think that this is the case, along with the computation time involved, we decided the issue wasn't worth investing another year of computer time in. If, however, someone can demonstrate that there is a set of methods that produces a strong correlation between discrimination and functional accuracy I will be absolutely thrilled. One thing I think might be particularly interesting for future exploration is sample size. We kept sample size constant here at 100 (75 training, 25 testing). I suspect that higher sample sizes might expand the space of methods for which discrimination accuracy is useful in model selection, but that's a question for another day.

- Even assuming that some combination of methods does exist that produces a strong correlation between discrimination and functional accuracy, we think it's very useful to demonstrate that there's a broad swath of approaches for which those two things are not correlated. Even if it's not broken everywhere, we feel there's great value to demonstrating that it's broken in a lot of places.

- Many of the methods people in the field might be inclined to suggest exploring are considered "best practices" due to previous methodological work in the field. That's reasonable, but we'd highlight the fact that most of those methodological studies favored those methods specifically because they maximize discrimination accuracy. Given that we're showing that discrimination accuracy is largely uninformative here for selecting niche estimates, their performance in those studies is not necessarily relevant here; see the second point above.

Why post on bioRxiv? The paper is currently in review, and we're hopeful that it will be accepted before too long. However, I'm currently working on several projects that build on this work, and need a citation target for it. Others have asked for something they can cite too, so I decided to just go ahead and put it out there.

Where can I get the simulation code? I've got a GitHub repo for it here, but I'm still in the process of making it prettier and easier to understand. You're welcome to download it now, but if you wait a few days it will likely be far more compact and comprehensible.

How should I change my methods if I'm concerned about this? As stated above, to me the big take-homes are:

- Use only a few predictors in model construction, chosen because you think they are important in limiting the species' distribution.

- Use methods that produce biologically plausible response functions.

- Visualize marginal suitability functions, and think critically about them as biological estimates.

These suggestions basically mirror general best practices in any sort of modeling. However, to my mind we as a field (myself very much included) have often allowed ourselves to violate these common-sense principles in pursuit of models that maximize discrimination accuracy. In light of what Teresa, Nick, and I have found with these simulations, though, I think we should be much more careful about our models as niche estimates, and much less beholden to methods that make maps that are tightly fit to species' current spatial distributions.

One final note: In addition to de-emphasizing discrimination accuracy somewhat in empirical modeling studies, I hope that two things come out of this study. First, I hope that future studies will examine the performance of alternative model selection metrics. Second, I sincerely hope that this will help to emphasize how much SDM/ENM needs a robust simulation literature (see also this excellent review). The question we examined in this study seems pretty fundamental to the field, and yet there really hadn't been much in the way of prior simulation work on it. I think there is a ton of other low-hanging fruit out there that needs to be reexamined in a simulation context; we've got a field where a lot of things have been justified by verbal arguments and appeals to common sense, and we really need to figure out whether that stuff actually works.

Addenda:

From Twitter, paraphrased: Why not evaluate other methods of measuring model fit (e.g., calibration)? In this study, our goal was just to look at the most commonly used metrics of model fit in the literature, and so we stuck with discrimination accuracy on occurrence points. People do measure model calibration and other aspects of model performance, but they're a tiny fraction of the published studies. I do strongly suspect that calibration will be a much better tool for model selection when the goal is to estimate the niche, and we should definitely look at that soon.
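As a rough illustration of why a calibration-sensitive metric might separate models that discrimination accuracy can't, here's a small Python sketch. None of this comes from the paper: the data are made up, they assume true presence/absence records rather than background points, and the Brier score is used only as one convenient stand-in for a metric that responds to calibration.

```python
def auc(presence_scores, absence_scores):
    """AUC as the share of presence/absence pairs ranked correctly (ties count half)."""
    wins = 0.0
    for p in presence_scores:
        for a in absence_scores:
            if p > a:
                wins += 1.0
            elif p == a:
                wins += 0.5
    return wins / (len(presence_scores) * len(absence_scores))


def brier(probs, outcomes):
    """Mean squared difference between predicted probability and outcome (lower is better)."""
    return sum((p - y) ** 2 for p, y in zip(probs, outcomes)) / len(probs)


# Made-up data: three groups of 10 sites, where the species is present at
# 2, 5, and 8 of the 10 sites respectively.
bins = [(0.2, 2), (0.5, 5), (0.8, 8)]
probs_a, outcomes = [], []
for p, n_present in bins:
    for i in range(10):
        probs_a.append(p)  # hypothetical model A predicts the observed frequency
        outcomes.append(1 if i < n_present else 0)

# Hypothetical model B: a monotonic distortion of A (same ranking, different values).
probs_b = [p ** 3 for p in probs_a]


def auc_for(probs):
    pres = [p for p, y in zip(probs, outcomes) if y == 1]
    absn = [p for p, y in zip(probs, outcomes) if y == 0]
    return auc(pres, absn)


auc_a, auc_b = auc_for(probs_a), auc_for(probs_b)
brier_a, brier_b = brier(probs_a, outcomes), brier(probs_b, outcomes)
print(f"model A: AUC = {auc_a:.3f}, Brier = {brier_a:.3f}")
print(f"model B: AUC = {auc_b:.3f}, Brier = {brier_b:.3f}")
```

Because model B is just a monotonic transformation of model A, the two have identical AUC, but B's predicted values no longer match the observed frequencies and its Brier score is worse. Whether this carries over to realistic SDM/ENM settings is exactly the open question raised above.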